Giants all the way down

Just after the frenetic sprint leading up to a conference deadline, I often end up chastising myself for not being more single-minded in my research. This weekend, while recovering from the ICML deadline, I found myself replaying the same familiar thought patterns: “I want to work deeply on only a couple of projects”, then “What would those be now that the slate is clean?”, and finally, realizing that I have just listed half a dozen projects that should all start immediately! It's a great problem to have, but to break the pattern a bit, I wanted to think some more about what it means to do impactful work.

About a year ago, Nature published a paper whose takeaway is boldly proclaimed by its title, “Large teams develop and small teams disrupt science and technology” (Wu, Wang, Evans 2019). The authors reach this conclusion by analyzing the citation graphs of a large number of papers, patents, and software projects. This notion of development versus disruption gets to the heart of what I think of as incremental versus impactful work. That said, the paper is careful not to say as much, insisting instead that both forms can be highly impactful. The authors' definition of disruption is very open to attack, but still interesting, so I'll write *disruption* in italics as a wink and a nod that this isn't really disruption. Specifically, the *disruption* of a paper is measured as

$$ D = \frac{n_i - n_j}{n_i + n_j + n_k}, $$

where, for a focal paper, $n_i$ is the number of subsequent papers that cite the focal paper but none of its references, $n_j$ is the number of subsequent papers that cite both the focal paper and at least one of its references, and $n_k$ is the number of subsequent papers that cite at least one of the focal paper's references but not the focal paper itself. So $D$ ranges from $-1$ (purely developmental) to $1$ (purely disruptive). I like to think of this in terms of cuts on a graph, or bottlenecks (i.e., disruptive papers disconnect the graph when cut). Intuitively, a highly disruptive paper is the principal source for the future papers that cite it, while a developmental paper is more of a footnote among many other citations.
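To make the formula concrete, here is a minimal Python sketch that computes $D$ on a toy citation graph, following Wu et al.'s formulation in which $n_i$, $n_j$, and $n_k$ are all counts of *subsequent* papers. It assumes the graph arrives as a plain dictionary mapping each paper published after the focal paper to the set of papers it cites; the function name `disruption` and all paper ids are hypothetical, and this is not the authors' code.

```python
def disruption(focal, focal_refs, later_papers):
    """Disruption index D = (n_i - n_j) / (n_i + n_j + n_k).

    focal        -- id of the focal paper
    focal_refs   -- set of ids the focal paper cites
    later_papers -- dict: id of each paper published after the focal
                    paper -> set of ids it cites (assumed input format)
    """
    n_i = n_j = n_k = 0
    for cited in later_papers.values():
        cites_focal = focal in cited
        cites_refs = not cited.isdisjoint(focal_refs)
        if cites_focal and not cites_refs:
            n_i += 1  # builds on the focal paper alone
        elif cites_focal and cites_refs:
            n_j += 1  # cites the focal paper alongside its sources
        elif cites_refs:
            n_k += 1  # bypasses the focal paper, citing only its sources
    total = n_i + n_j + n_k
    # D is undefined when no later paper touches this neighborhood;
    # returning 0.0 in that case is an arbitrary choice for this sketch.
    return (n_i - n_j) / total if total else 0.0


# Hypothetical toy graph: focal paper F cites A and B.
later = {
    "P1": {"F"},
    "P2": {"F"},
    "P3": {"F", "A"},
    "P4": {"B"},
    "P5": {"X"},  # unrelated paper, ignored
}
print(disruption("F", {"A", "B"}, later))  # n_i=2, n_j=1, n_k=1 -> 0.25
```

A paper whose followers all cite it alone scores $1$; one whose followers always cite it together with its references scores $-1$.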

Suffice it to say that the authors’ results are hard to argue against (if you accept their notion of disruption). What does this mean for us? As AI and machine learning continue to grow in popular interest, the size of research groups both inside and outside of academia has grown substantially. Part of the cause of this effect, Wu et al. speculate, is that

> large teams demand an ongoing stream of funding and success […] makes them more sensitive to the loss of reputation and support that comes from failure. […]

> smaller groups with more to gain and less to lose are more likely to undertake new and untested opportunities

I suspect that what we are seeing here is a combination of at least two things. First, the above, which could be framed as a sort of innovator's dilemma within large research groups themselves. Second, a sort of reversion to the mean as collaborations grow larger. By this I mean that collaborations, by their nature, combine multiple views, and as the number of views being combined grows, so does the pull toward the pre-existing collective wisdom (the exact opposite of disruptive).
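One crude way to picture this second effect, under the purely illustrative assumption that each collaborator's view is an independent random draw around the field's consensus (taken as 0) and that a team's direction is the plain average of its members' views: the spread of the team's direction then shrinks roughly like $1/\sqrt{n}$ as the team size $n$ grows. The toy model and the function name `team_spread` are hypothetical, not something from Wu et al.

```python
import random
import statistics


def team_spread(team_size, trials=10_000, seed=0):
    """Standard deviation of a team's averaged view around consensus (0).

    Toy model (an assumption, not from the paper): each member's view is
    an independent draw from a standard normal centered on consensus,
    and the team's direction is the mean of its members' views.
    """
    rng = random.Random(seed)
    team_views = [
        statistics.fmean(rng.gauss(0.0, 1.0) for _ in range(team_size))
        for _ in range(trials)
    ]
    return statistics.pstdev(team_views)


for n in (1, 4, 16):
    # Expect roughly 1 / sqrt(n), i.e. about 1.0, 0.5 and 0.25.
    print(n, round(team_spread(n), 2))
```

Averaging is of course a caricature of how collaborations actually reach decisions; the only point is that combining more independent views pulls the result toward the center.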

Credit assignment and disruption

In research, credit assignment (in the academic sense, as opposed to the machine-learning sense) comes up in a lot of informal conversations. Partly, it is an evergreen issue that comes with the nature of research careers, which are largely (if not entirely) built upon one's reputation for good work. Recently it has been amplified by voices, Jürgen Schmidhuber's in particular, arguing that the fields of artificial intelligence (AI) and machine learning (ML) have become highly myopic in their attribution of credit to previous works.

To some degree, I think they are correct. I'm fond of the phrase ‘standing on the shoulders of giants,’ and I think we (as a field) are often quite near-sighted when looking down at the tower of work beneath our feet. Believe it or not, the paper discussed above has something quite concrete to say about this.

One of the results from the Wu et al. paper was that disruptive papers tend to cite older and less popular works. This is clearly not Schmidhuber's point or motivation. However, it suggests that the pattern he attributes to poor credit assignment could partly be a side effect of a field-wide shift towards less disruptive work: if developmental papers mostly cite recent, popular work, then a field producing more of them will cite its older literature less.

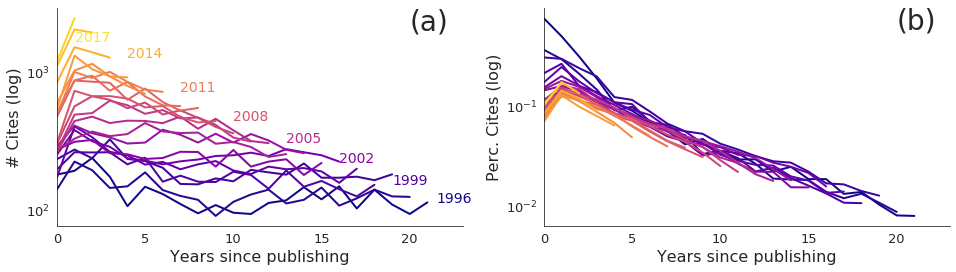

Just for fun, I re-analyzed some of the data from Microsoft's NeurIPS Conference Analytics post from late 2018. In the figure below, I show two different views of the data from their “Memory of references” section. From this, it appears (in my opinion; no rigor here) that if there is a general trend in the field, it is a very gradual one, since the overall drop-off in references to older papers is extremely consistent over the years.
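For anyone who wants to try a similar analysis on their own data, a minimal sketch, assuming you already have one (citing year, referenced year) pair per reference: it reports, for each citing year, the share of references that are at least `min_age` years old. The input format, the function name `old_reference_share`, and the toy pairs are hypothetical; this does not reproduce Microsoft's data or pipeline.

```python
from collections import defaultdict


def old_reference_share(pairs, min_age=10):
    """Share of references at least `min_age` years older than the citing paper.

    pairs -- iterable of (citing_year, referenced_year) tuples, one per
             reference (assumed input format)
    Returns a dict: citing_year -> fraction in [0, 1], sorted by year.
    """
    total = defaultdict(int)
    old = defaultdict(int)
    for citing_year, ref_year in pairs:
        total[citing_year] += 1
        if citing_year - ref_year >= min_age:
            old[citing_year] += 1
    return {year: old[year] / total[year] for year in sorted(total)}


# Hypothetical toy pairs, not real NeurIPS data.
pairs = [
    (2016, 2015), (2016, 2001),
    (2017, 2016), (2017, 2014), (2017, 1998), (2017, 2017),
]
print(old_reference_share(pairs))  # {2016: 0.5, 2017: 0.25}
```

If this share stays roughly flat from one conference year to the next, that is the kind of consistency described above.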

A side note on the top image

Wu et al. also produced some interesting plant-like visualizations of the citation graphs surrounding particular papers. Indeed, the desire to play with this sort of visualization was the actual motivation behind this post, although a group at Autodesk made a similar visualization of a set of papers back in 2012 that looks better than either. The top image shows a partial reference subtree for my paper on IQN. I built it by (gently) scraping data from Semantic Scholar and recursively following each paper's references, and their references in turn, while skipping duplicates. At depth one (just the immediate references) this gives 46 papers. At depths two and three it balloons to 631 and 4638 papers, respectively. Horizontal position corresponds to publication date, although I took some artistic liberties with the scaling.
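The expansion described above is essentially a breadth-first traversal of the reference graph with a visited set. A minimal sketch follows; `get_references` is a hypothetical stand-in for whatever fetches a paper's reference list (in a real scrape, a polite, rate-limited call to Semantic Scholar, which is not shown here), and the toy graph is made up.

```python
from collections import deque


def expand_references(root, get_references, max_depth=3):
    """Breadth-first expansion of a paper's reference tree, skipping duplicates.

    root           -- id of the starting paper
    get_references -- hypothetical callable: paper id -> list of cited ids
    Returns a dict: depth -> list of paper ids first reached at that depth.
    """
    seen = {root}
    layers = {}
    frontier = deque([(root, 0)])
    while frontier:
        paper, depth = frontier.popleft()
        if depth == max_depth:
            continue  # do not expand past the requested depth
        for ref in get_references(paper):
            if ref in seen:
                continue  # already reached at the same or a shallower depth
            seen.add(ref)
            layers.setdefault(depth + 1, []).append(ref)
            frontier.append((ref, depth + 1))
    return layers


# Hypothetical toy reference graph.
toy_graph = {
    "root": ["A", "B"],
    "A": ["C", "D"],
    "B": ["D", "E"],
    "C": ["F"],
    "E": ["F", "A"],
}
layers = expand_references("root", lambda p: toy_graph.get(p, []))
print({d: len(ids) for d, ids in layers.items()})  # {1: 2, 2: 3, 3: 1}
```

Because breadth-first search discovers papers in order of depth, each paper is assigned to the shallowest layer at which it appears; summing the layers gives the cumulative number of distinct papers up to each depth.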

Impactful or incremental; disruptive or developmental

Back to the introspection! I started by talking about the number of ongoing projects and instead ended up on the topic of team size. We might hope to do impactful, *disruptive* research by keeping many small-group projects in the air at once. Indeed, this feels a lot like the academic model, where students have their own projects that you work on or provide guidance for. But as the number of projects grows, your individual contribution to each necessarily shrinks. I see many good examples of this style of researcher in our field, but fewer of a senior researcher who focuses mostly on a small number of potentially disruptive projects. Does that mean the former is the path we should all be aiming for? I really hope not.